When we have to import a lot of assets into a project, the time the Editor spends processing them can be a real pain. Being able to see which asset category takes the longest to import can help you:

- Know where to direct your optimization efforts

- Speed up your import times

- Speed up platform switch times

- Improve the performance of your Continuous Integration Pipeline if you’re doing clean nightly builds

There are already Asset Store plugins that help you inspect your Editor import timings, but Unity recently released a free Editor log parser. You can download it here: https://github.com/Unity-Javier/SimpleEditorLogParser

To use it, import a bunch of assets into your project (textures, audio, scripts, whatever you like) and then make a copy of your Editor.log file, which you can find at C:\Users\username\AppData\Local\Unity\Editor\Editor.log. Paste the copy into a folder (I’ll use C:\Projects\Game01\). Now let’s parse it with Unity’s SimpleEditorLogParser:

- Download the zip file from GitHub

- Open the .sln project with VS2019

- If you’re prompted with a warning saying that VS needs an extra module installed in order to open this project, install it (this will open the Visual Studio Installer automatically)

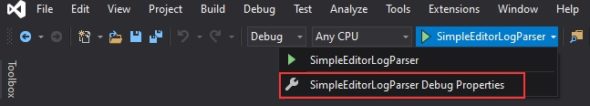

- Go to the Debug command line settings

- Add these arguments:

`--path C:\Projects\Game01\Editor.log --output \CategorizedLog.csv` (replace the path with the folder that holds your copy of Editor.log)

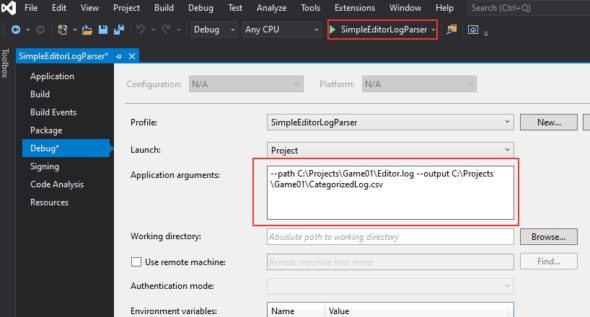

- Save and run the project: a CSV file should appear in the same folder

- At this point I suggest uploading the CSV to your Google Drive and opening it as a Google Sheets file

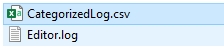

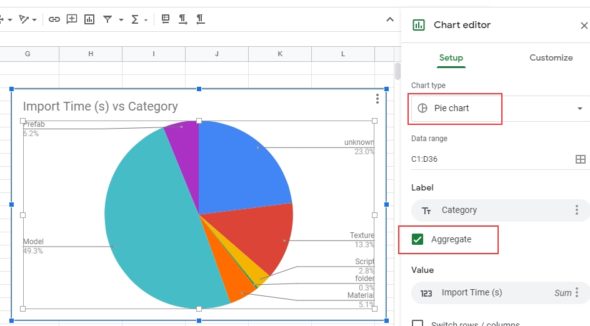

- Select both the Category and Import columns, then insert a chart

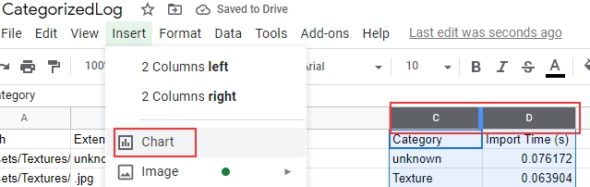

- Click on the created chart and, in the Chart editor options, select Aggregate and the Pie chart type. And there we go! We have our import time vs. category pie chart, ready to show us at a glance where we can improve asset import times!

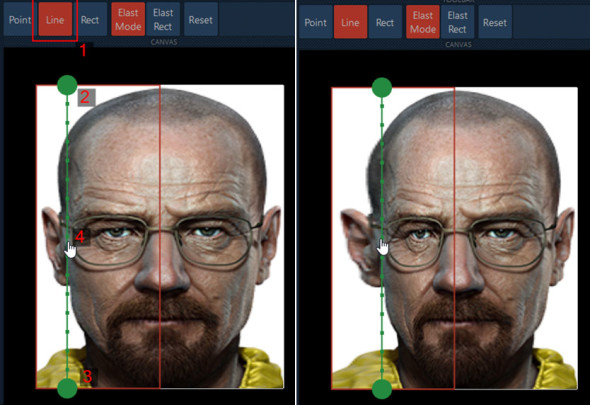
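If you would rather skip the spreadsheet, the same per-category aggregation can be sketched in a few lines of Python. This is only a minimal sketch: the column names `Category` and `Import Time in ms` are assumptions on my part (check the header row of your own CategorizedLog.csv and pass the real names), and the sample rows are made up just to show the shape of the result.

```python
import csv
import io
from collections import defaultdict


def total_import_time_by_category(csv_file,
                                  category_col="Category",
                                  time_col="Import Time in ms"):
    """Sum the import-time column per category, largest total first.

    Both column names are assumptions: adjust them to match the
    header row of the CSV the parser actually produced.
    """
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        raw_time = (row.get(time_col) or "").strip()
        if not raw_time:
            continue  # skip rows that carry no time value
        category = (row.get(category_col) or "Unknown").strip()
        totals[category] += float(raw_time)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Made-up sample data, not real parser output.
SAMPLE = """Category,Name,Import Time in ms
Textures,Assets/a.png,120
Audio,Assets/b.wav,300
Textures,Assets/c.png,80
"""

if __name__ == "__main__":
    ranked = total_import_time_by_category(io.StringIO(SAMPLE))
    grand_total = sum(ms for _, ms in ranked)
    for category, ms in ranked:
        # Print each category with its share of the total import time.
        print(f"{category}: {ms:.0f} ms ({ms / grand_total:.0%})")
```

To run it on the real file, swap the `io.StringIO(SAMPLE)` argument for an open file handle, for example `open(path_to_csv, newline="", encoding="utf-8")`, where `path_to_csv` points at your CategorizedLog.csv.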

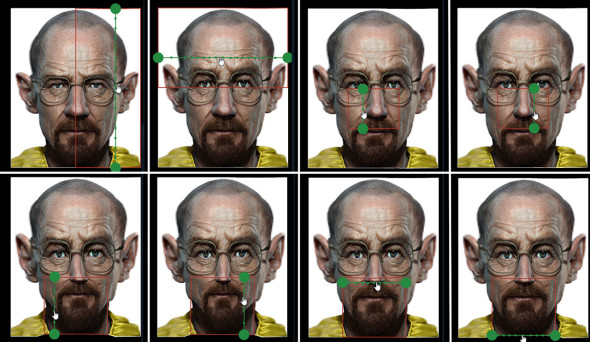

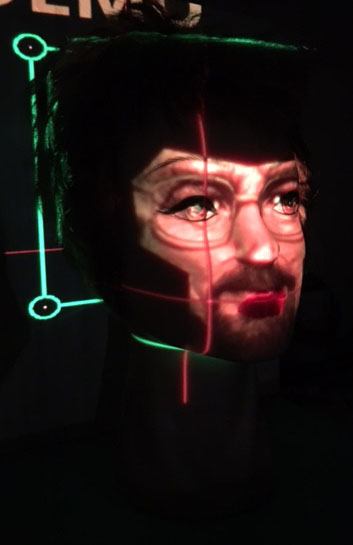

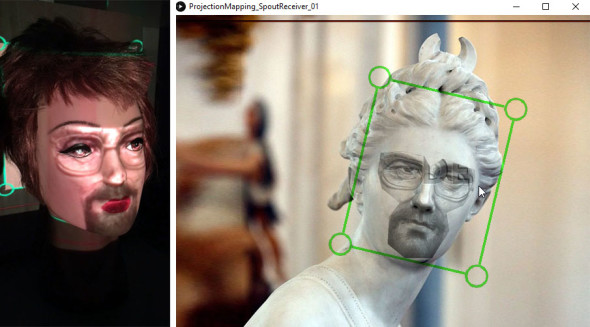
